Summary

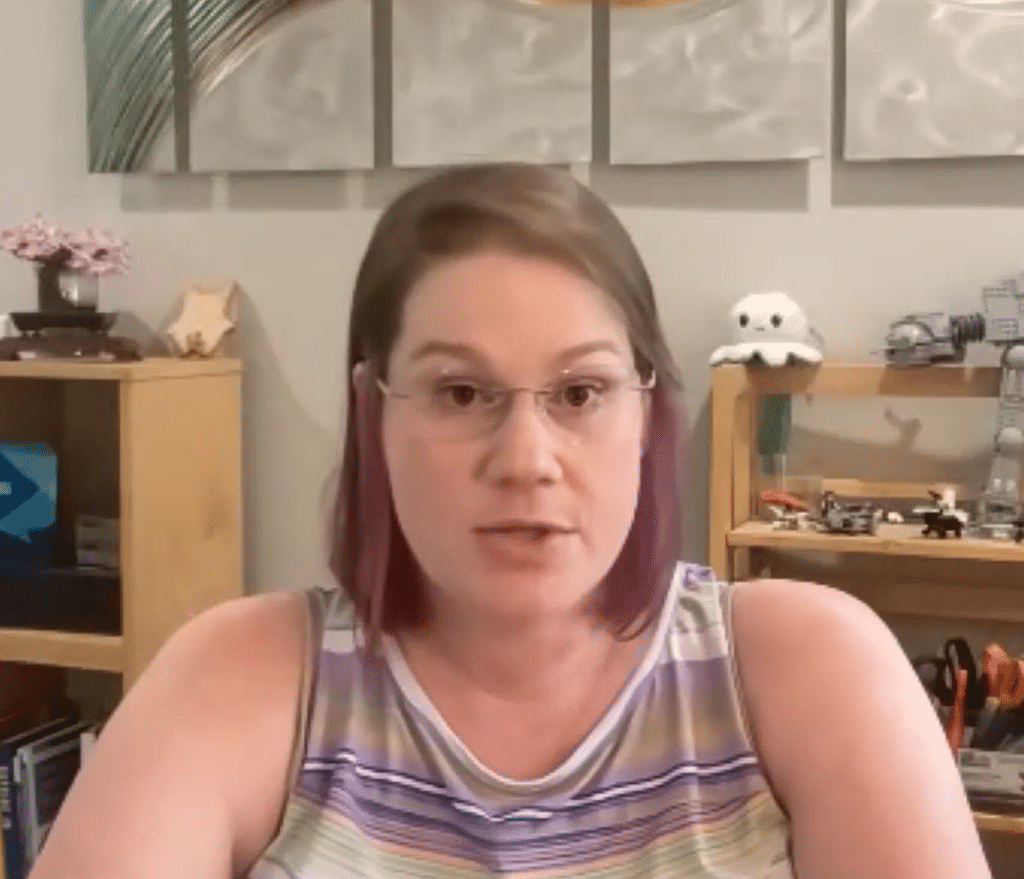

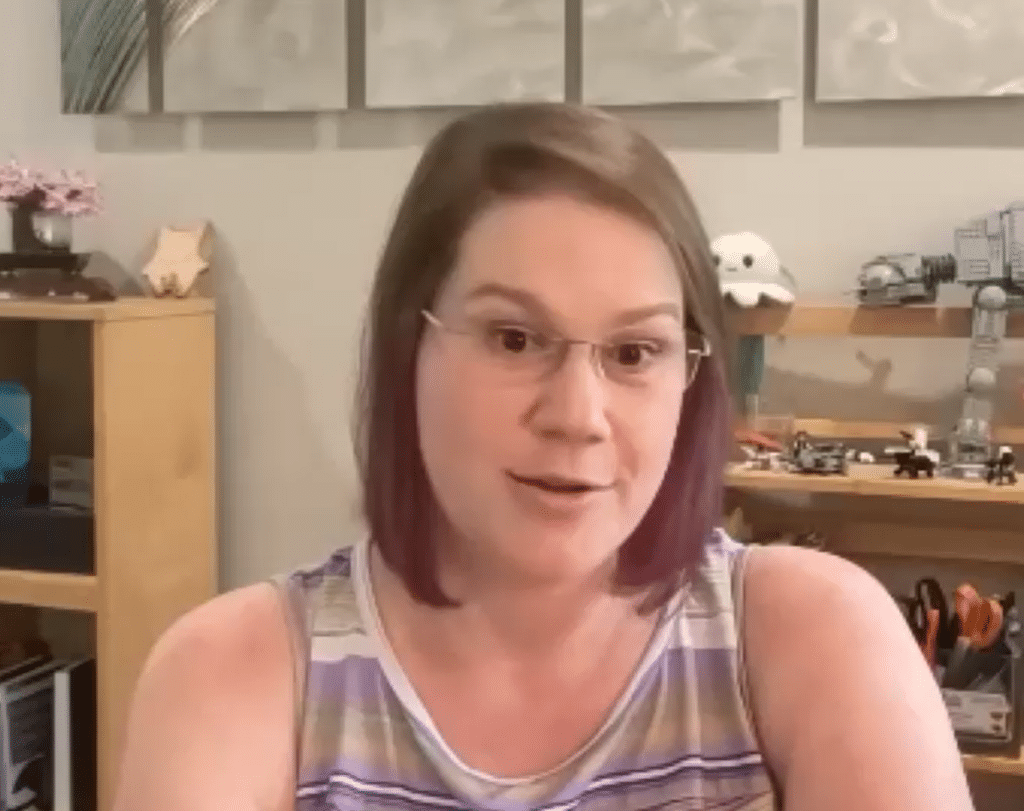

How do you bring a user-centric mindset and best practices to one of the world’s largest manufacturers? That’s the challenge facing Ki Aguero, who is an “army of one” running UX insights for the online business unit at one of the world’s top car companies. Ki is a longtime UX veteran; she previously led UX research at one of the world’s largest retailers, and before that was a researcher and solutions consultant at UserTesting.

In her new role, Ki is focusing heavily on ways to make sure her insights are translated into action, and how to empower non-researchers to gather insights safely and appropriately. We talked with her about her best practices and what she’s learned from driving user-centric processes in legacy companies. Some of her key points were:

- To make sure the company takes action on your insights, focus on the outcomes you are trying to drive, and don’t be shy about self-promotion.

- Make it very convenient for people to consume your insights in small bites.

- When scaling research to non-researchers, focus on getting the test plan right.

- Train non-researchers at the moment when they need to do research. Abstract training ahead of time won’t stick in their heads.

How to get the company to act on your insights

Q. A question I hear frequently from UX researchers is, “how do I make my company take action on my insights?” You did a speech on that at this year’s Insights Innovation Expo. Can you give us a summary of what you discussed?

A. I’ve been thinking a lot about compassion plays; about making sure that your insights turn into action, so they don’t just sit on a shelf and gather dust.

I know there are certain kinds of research that are ongoing programs and it’s a constant machine of research that’s monitoring customer sentiment and that kind of thing. I totally respect that. And I recognize that this advice doesn’t apply to those programs. But a big thing for me is, if I’m going to do UX research, I want to know what decision you aren’t making until you have this research in hand.

Every time that a product manager or a designer comes to me, they need to make a call, and they’re not feeling good about making the call without research. So I ask the question up front: What decision are you trying to make? What action are you trying to take?

They often have a little trouble articulating that at first. Sometimes they get caught off guard when I ask that question, but I’ll rephrase it. Most of my kickoff calls feel like a therapy session anyway: “You tell me where it hurts and you tell me how you’re struggling. And then I’ll talk about some tactics that I have that might help you with that.”

Coming out of a kickoff call, I know what decision they need to make. Maybe it’s “I need to feel more confident about the design before I move forward.” Or “I need to know if there are any changes to make before it carries on.” Or “I need to know if this is worth investing in before committing it in the roadmap.”

Once I know that, I have an outcome section at the end of my test plans. Almost every researcher has a test plan, it’s like the backbone of their project. They use it to articulate the objectives of the study and the participants and the method. Mine ends with an outcome section: At the end of all of this work that I’m going to do for you, here’s what we’re going to do as a result. That looks different every time, but it might be “if more than 50% of users say the right answer or understand this concept, then we’ll move forward with incorporating this piece of information into the menu.” Or, if the result is vice-versa, then we’ll need to have more discussions about it.

I’m giving general examples, but they usually are somewhat specific outcomes coming out. That’s a really important one. If you don’t want research to sit on a shelf and never be touched, that’s a super important thing. Commit to action before you even agree to do research.
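The pre-committed outcome described above can be pictured as a decision rule that is written down before the study runs. The following is a minimal sketch in Python, not something taken from Ki's test plans: the function name `decide_outcome`, the wording of the two actions, and the participant counts are all hypothetical, and only the 50% threshold echoes the example in the interview.

```python
def decide_outcome(correct: int, total: int, threshold: float = 0.5) -> str:
    """Return the action agreed on before the study ran.

    `correct` is the number of participants who understood the concept,
    `total` is the number of participants. All names are hypothetical.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    share = correct / total
    # The rule is "more than" the threshold, so exactly 50% does not pass.
    if share > threshold:
        return "move forward: add the information to the menu"
    return "hold: discuss further before deciding"


# Illustrative numbers only: 6 of 10 participants understood the concept.
print(decide_outcome(correct=6, total=10))
```

Writing the rule down first means the result maps straight to an action, whichever way the numbers fall.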

Make your insights snackable

The other thing that I have tried and found success in is making sure that the insights are really snackable. By that I mean brief, so they’re easy to consume. I can eat a handful of them and keep going. I can have a little nibble and go about my day.

Snacks are also just handy. I can go find it if I need it. We all have tools that we use on a regular basis. Product managers are in PowerPoint a lot in my experience; they have to do slides to communicate up and to communicate out about what they’re doing in the quarter. So if I’m delivering results to them, I’m trying to make a single slide or two slides that they can then bake into their reports or their readouts that says, “we did the user research and it indicates this and this is the direction that we’re going.”

If my designers ask for research, and I’m not having them do it themselves, that’s different. I go nuts on the visuals with designers. Screenshots of every page and little callouts with color coding because that is how they talk. That is how they absorb things. And I usually will do more of a placemat style. So it’ll be on either a Miro board that’s just for research for them, or it’s like a Figma page within their Figma file.

I try to put the research adjacent to the tools that they use every day, so that they actually go look at it instead of, “Ugh, where the hell was that? I gotta go log into this thing I never use and then I gotta go hunt it down and then go get it out of the slide deck. And I haven’t opened PowerPoint in a month and a half, so it’s outta date. Nevermind, I don’t need the research that badly.”

It doesn’t meet them where they’re at. It’s not snackable. It’s not consumable. So the results go unused.

And then, for sharing across the org, and especially with leaders, I have found video really handy. Like, “here, watch this woman encounter three usability issues inside of two minutes. You’ll love it.” I try to make it really short: you can fit this in before your next meeting. I throw it into chat and try to make it a little bit clickbait, a bit more consumable.

No one except for researchers wants to read big decks. We love them, but everyone else wants to nibble on insights and then they have to get back to their actual job. For better or worse.

It’s time for shameless self-promotion

The last thing about turning research into action starts with a story. When I got my first corporate job, self-evaluation time came around and I asked my manager, what is the tone here? Like, do I need to be super critical? Do I need to be hyper-happy? Is there a tone that I should write with?

I don’t remember exactly what she said, but when I went back to my notes and I started working on my actual self-eval, the line I’d written was “shameless self-promotion, yo.” It was just a really funny phrase, and I put it at the top of my notes deck while I was crafting up my self-eval.

It was just such a good simple phrase. Like, this is your chance to talk yourself up. Talk about how much of a badass you are. I liked the phrase so much that it’s turned into a bit of a mantra.

That was a really hard thing to adjust to. Most researchers prefer that the research speaks for itself. And so it can be hard for a researcher to promote themselves in any sense of the word. I’ve had to break that in the last couple of years and really try to think of marketing the insights, marketing my services as a researcher, and then marketing the research itself.

I’ve been blessed to have a really awesome leader who admitted to me, “guys, I don’t know what you do. I’m three steps away from your day-to-day. If you do cool things, feel free to drop me a line about it.” And so in the spirit of shameless self-promotion, when I do a big project or something like that, I make sure that I send a note to him like, “Hey, did you happen to notice I just finished this project, I think you would enjoy it.” That has actually yielded amazing results. He has actually taken things and forwarded them onto his boss who’s C-level. How many researchers’ insights end up in a C-level’s inbox?

Other ways to promote user research

First, I host weekly office hours. I do mornings one week and then an afternoon the next week so that I get my west coasters and my EU people, and make sure that everyone feels supported.

Secondly, when people show up for the first week at a new role, hopefully the first thing they’re asking is, what do we know? So as soon as I hear that a new team member has been added, I send out this onboarding email. It’s just saved as a template in my Outlook. It says, “Hi, I’m Ki. Here’s how you get access to research. I’m going to invite you to our office hours. I’m here if you need me.” I try to make sure that, from the get-go, they know who I am, they know where to find me if they need me.

And then the last thing, and the thing that I really enjoy doing, is once a week I try to send out a little tidbit, one of those little insight snacks, to the whole product org. There’s 65, 70 people on the product team now.

The tidbits don’t all get a whole lot of reaction, but for one of my favorite posts, a product manager was like, “wow, these buttons and texts, they look so much bigger than I thought they would.” My boss is like, “thank you for sharing that video, that does a great job of showing an issue I’ve been wanting to fix.” And my boss’s boss just sent a message that said, “thanks for being you.”

It’s so easy for researchers to sit in their corner and feel unappreciated. You gotta put yourself out there. If you want people to recognize the work and make use of the findings, you may have to be a little bit more proactive in putting them in front of people. If you hear something in a meeting and you did research on that last year, you should float that in as, “Here’s a research rerun for the week. Quick reminder, we touched on this topic last year.” We absolutely have that power. I don’t think researchers do it enough, so research just sits on the shelf, and there’s a lot that we could be doing to keep that from happening.

Don’t democratize, put your research on rails

Q. In your new role, you’ve joined a new team that hasn’t had an experience researcher before. You’re an army of one, so I presume there’s more desire for research than you can fulfill. How are you thinking about scaling? How is this going to work for you?

A. I don’t think of it as democratization. I think of it as, “what research can I put on rails?” I have watched way too many designers try to write their own tasks. They don’t write tasks, they write steps: “Sign up for delivery. And then pick Tuesday. And then choose to have it delivered to the curbside…” They can’t help but write out the steps because they designed in steps. That’s how they work, that’s how they’ve been staring at this thing forever.

When I come in, I’m like, “okay, you want the user to schedule delivery to curbside on April 21st, and take away the old unit. Cool. That is one task. That’s not six tasks.”

Also, I found that they can’t help but get off track. They watch eight videos, and users say the darndest things and take them in all kinds of directions, and most of them have nothing to do with the experience that they’re testing. And I’m like, “you need to focus. That is not the point. Please stop thinking about that. Why is that in your report?”

So they have a harder time sticking to the script, and that’s okay. I have been doing this work for 10 years, so I’ve got more practice staying focused. So what I’ve kind of landed on is, no matter who you are, whether you’re a designer or researcher or anybody else, the place where it is most problematic and where I see the most risk of a research project going wrong is test planning. If you don’t nail the test plan (have really clear objectives upfront, have a concrete task set, have really clear questions), if you mess up any of that, the whole rest of the project loses value.

So I started sitting down with non-researchers very early in the process, before they write any tasks, and I’ve been like, “what decision do you need to make? What action do you need to take? What task are you trying to get people to do, and do you need any more data points? Do you need clarity, ease of use, or anything like that?” And I have them articulate all of that in a worksheet. Each objective has a number assigned to it and then we sit and write tasks and questions, and each one has to tie back to one of their objectives. If it doesn’t, we’re not doing it.

Ki Aguero’s test-planning and outcome-tracking spreadsheet

The other temptation I’ve seen over and over again is I’ll be like, “what if we ask time on task or what if we ask difficulty rating?” and they go, “you might as well ask.” And I’m like, no, don’t just add things to add things. If it’s not an objective that ties clearly to you making your decision, get it out. I do not need you to ask that question.

The scope creep gets so bad. I love it when test plans are like one task and two follow-up questions or one task, follow-up question and a second task and a follow-up question, the end. Those little tiny tests are super focused on the point and it’s less work for the designers to watch all the videos.

And the last thing we set up in the worksheet is instructions for each task. When you are watching task 1, write down what path they take and any questions that they have. Task 2, note whether they noticed the CTA. We’re very, very explicit about what they need to be watching and listening for. That way, when they actually do sit down and start watching the videos, they have a place to put it in the analysis table.
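To illustrate the worksheet idea (numbered objectives, every task or question tied back to one of them, and an explicit note on what to watch for), here is a minimal sketch in Python. It is an editorial illustration, not a reproduction of Ki's spreadsheet: the `Task` class, the `untied_tasks` helper, and the sample objective and tasks are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Task:
    prompt: str                   # what the participant is asked to do or answer
    objective_id: Optional[int]   # number of the objective this ties back to
    watch_for: str                # what the reviewer should note in the videos


def untied_tasks(objectives: dict, tasks: list) -> list:
    """Return tasks that do not tie back to any numbered objective."""
    return [t for t in tasks if t.objective_id not in objectives]


# Hypothetical worksheet content, for illustration only.
objectives = {1: "Decide whether the curbside delivery flow is ready to build"}
tasks = [
    Task("Schedule curbside delivery for April 21st with removal of the old unit",
         objective_id=1,
         watch_for="Path taken and any questions asked"),
    Task("Rate how difficult that was",
         objective_id=None,
         watch_for=""),
]

# Anything listed here would be cut from the test plan.
for task in untied_tasks(objectives, tasks):
    print("Cut from plan:", task.prompt)
```

In this sketch the difficulty rating is flagged because it supports no stated objective, which mirrors the "if it doesn't tie back, we're not doing it" rule.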

It’s an hour of our time upfront to sit down and to orient the designer to the worksheet. I’ve had multiple designers work through it and every time they’re like, “it keeps me so much more focused.” You get through research in a quarter of the time because you’re not slogging through all of these tasks and questions that you didn’t really need in the first place.

So that is my number one way of democratizing research: putting it on rails as much as I can.

And mostly what I have found successful in terms of scaling research efforts is unmoderated prototype testing. Like, you have an idea for how to solve the thing. Cool, let’s put it in front of people and see if it actually does that thing.

The importance of exposure hours

Other scaling efforts I have a harder time with.

I do think that I have internalized the idea of the exposure hours: you just need to actually put your heart next to a customer’s heart for two hours every six weeks. I’ve been holding off on implementing a customer listening program that would allow this kind of exposure to happen at scale, because I really need a second researcher to help me run the room and keep an eye on the team chatter.

But as soon as I have that second person to help me control the crowd, I can’t wait to start. Maybe it’s “Feedback Friday”: we’re going to talk to a customer and you guys have an opportunity for 45 minutes of exposure to a customer.

To me, that’s the second way that you scale: you provide the opportunities for people to connect to customers. It’s not my job to package everything into containers; I just need to make sure that you actually connect to customers on a regular basis.

I’m still in the early days of figuring out how to scale that up and make that work for the vast majority of my people. But when I think about scaling up the efforts, that’s at the core, I just want to make sure you can connect to people and feel connected to our customers on a more regular basis.

If I do that, I’m not as worried about training people on how to do tree tests and stuff like that. I can still run a tree test for you, but I’m also just connecting you to your people more often.

Q. Your approach to scaling is slightly different from what I see at most companies. Generally companies will fall into one of two groups. Some of them train non-researchers very heavily and almost try to turn them into junior researchers. Others do much less training but put a huge number of guardrails around the non-researchers, such as approval flow and mandatory use of templates. You’re kind of in this middle ground where you’re both more supervisory and less supervisory than what I’ve seen other people do. How did you get there?

A. Training only lands when there’s a specific use case. In most cases, I find that designers are most interested in learning more about user testing when they have a project that needs user testing in front of them. Otherwise it’s too theoretical, it’s too removed from what they are thinking about and working on day-to-day.

That’s why I do the worksheet with them, because I can go, okay, here is the worksheet and here’s the way that you think about it and nope, we’re not splitting these out into different things. I am still teaching them. I’m teaching them in a practical moment when they actually have a design of their own on the line.

That is a very important thing. I can shove training at you. I can talk until I’m blue in the face, but it’s all divorced from the practicalities of your job and it’s going to be six, eight, 12 weeks before you actually need any of that user testing knowledge. And by then most of it has melted out of your brain anyway. So why would I fill your head full of details about my job well before you are interested in learning about it? It doesn’t make any sense to me to teach people that way.

I just would so much rather teach somebody when their appetite to learn is actually present.

So that’s the moment when I teach.

Q. Often when I talk to research teams, they talk about generative versus evaluative research, with the idea that we are going to democratize the evaluative stuff so that we can focus on the generative stuff for you. Is there something real about that or is that not the right way to look at it?

A. I think yes, it’s easier to put research on rails that’s evaluative. You know — a prototype test, there are more binaries. Either they made it or they didn’t. Either they got stuck or they didn’t. Either they had questions or they got through it just fine. That kind of stuff, I feel more comfortable being like, “yeah, you got this.”

When it comes to more generative stuff, it’s very important that they hear it and hear the person behind it, but the researcher probably still needs to generate the overall themes. I would not leave the non-researchers in the group to generate themes unless they all did it together as a group. Like you could facilitate that kind of thing.

I have seen researchers do that and do it successfully. There’s something very powerful about, “okay guys, we’re going to watch five videos today and then we’re going to do affinity diagrams in the room together about the things we just watched.” And I would be open to doing something like that.

But otherwise, again, I go back to exposure. Just remember there’s human beings that you’re designing for and I know you don’t think about them a lot, but I’m going to make you think about them for 45 minutes and then that’s good enough for me.

Q. I want to test my understanding. I’m getting this vision of scaling research where it’s not about totally controlling it and doing it all yourself, and it’s also not about just throwing it over to them and having them do it, but it’s more like a facilitated approach where you are focusing on the part that you can really add value to, and you’re empowering them to run with the parts that are safe for them to run with, but you are still involved in supervising.

A. Yep. It’s not democratization, it’s research on rails. I just put some discipline around it and then I try to let you roll. I haven’t really let people play with information architecture and definitely not surveys. Those things are really not something that a non-researcher should touch or try to make.

I don’t ever do a full scale survey anyway. I ask two questions and put them on a slide and talk about the verbatims as well and it’s usually like one graph of findings and then a recommendation that I make.

It’s not heavy exhaustive surveys, it’s something I can squeeze in on the side. And the same is true for most information architecture testing. It’s not hard, but I still don’t want to make other people do it and have them make a silly mistake.

I did see some agency work on tree testing, and they were throwing around jargon terms like the VHP. And I was like, “oh God, users won’t know what the hell VHP is.” That’s the thing that I worry about on the tail end. If you make a bone-headed mistake like that, you’re using that data and making decisions and you should not draw conclusions from that, but you didn’t notice in the writing process.

So yeah, there are still some methods that I’m just like, nope, I’m going to go ahead and own that. And it’s not that it’s super complicated, it’s just I know that it can go wrong and I don’t do a ton of training.

This is the second part of Ki’s interview. The first part focused on how to teach empathy to a company. If you want to learn more from Ki, check out her articles on Medium, and her blog posts about her new role.

Photo by Ramazan Karaoglanoglu on Pexels

The opinions expressed in this publication are those of the authors. They do not necessarily reflect the opinions or views of UserTesting or its affiliates.