
Summary

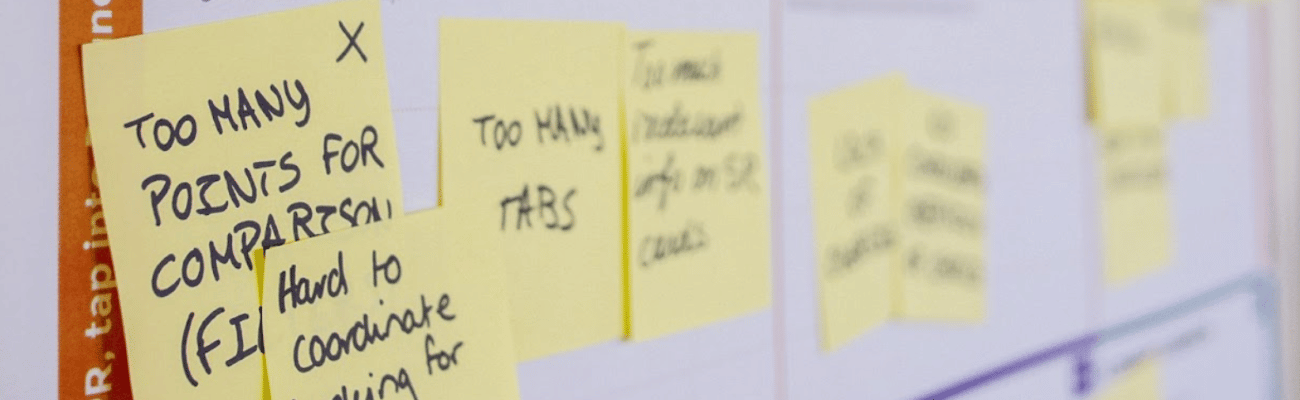

We work with many companies that want to scale (or democratize) experience testing and insights to non-researchers. Across those companies, we’re seeing consistent patterns in what works and what problems to watch out for. We’re building these lessons into our playbooks for companies that want to scale.

Here’s a preview of what we’re learning so far. If you get these five tasks right, your scaling transformation will be far more likely to succeed:

- Get strong executive sponsorship

- Build insight-gathering into the formal goals of the people who will be testing

- Document best practices, and create reusable templates and screeners for common tests

- Provide detailed, ongoing training

- Start with a pilot deployment to a small number of employees, and then expand

Here are more details on what to do, written by Kuldeep Kelkar, SVP of UX research services. A version of this article was first published in his LinkedIn feed.

At most enterprise organizations, the demand for experience research outweighs the supply of professional researchers. It’s common to see only one researcher for ten or more designers or product managers, making it impossible for researchers to support every product development effort. This insight gap is driving many companies to empower non-researchers to run their own experience tests, a process that is often called either scaling or democratization.

In our work with large enterprise companies that want to scale insights, we have found the five best practices below to be the key to success. We have also worked with a handful of customers where democratization fizzled out within months, often because one or more of these practices was missing.

Nielsen Norman Group (NN/g) published a good guide on scaling, titled Democratizing User Research. It focuses mostly on the research process itself rather than on organizational change. We’d like to add our organizational best practices for scaling successfully.

1. Get an executive sponsor!

Organizations that have successfully scaled insights to designers and product managers over the long term have one thing in common: someone higher up in the organization values it, sells it, and even mandates it. This could be the chief product officer, chief design officer, SVP or VP of design, chief customer officer, or chief marketing officer.

All designers and product managers are under time pressure. Most of them want to be customer-centric and know that collecting end user feedback is important and necessary. But if it’s not part of company culture and if key executives don’t highlight it, or preferably mandate it, it will often be neglected in the rush to get things done. Successful scaling needs strong support from a key executive in the chain of command of the designers and PMs running the tests.

Examples of executive support:

- The key executive declares the intent to be customer-centric at an all-hands meeting. The meeting should also illustrate how teams can use experience research to get continuous feedback, and how the organization will measure the effort’s success.

- The key executive gives a quick five-minute introduction and thanks everyone taking part in the experience research training.

- The key executive publicly recognizes the individuals and/or teams who successfully implement continuous product discovery and drive continuous improvement through experience research.

- The key executive evangelizes the benefits by sharing internal scaling success stories and study results with the full organization.

2. Build experience testing into goals / OKRs / MBOs

In line with the first recommendation, once there is executive support for scaling research, organizations should make it part of designers’ and product managers’ OKRs (or MBOs or annual goals, whatever term you use). Most organizations say they want to be customer-centric, but unless it’s part of the annual review process, it does not happen, because whatever does get measured takes priority. But if experience insight-gathering is part of designers’ and product managers’ annual goals, it’s very likely that it will happen.

Examples of effort metrics you should build into individual and team goals:

- X studies per month, per initiative.

- Y% of designers and product managers run their own studies each quarter.

- Z% of products have at least one round of user feedback every 6 weeks.

- An increase in the number of larger, strategic research programs that professional researchers can run because they have handed off evaluative testing.

- Employees have a “customer exposure” goal (for example, they are required to spend at least one hour per month in customer interviews).

3. Create best practices, templates, and screeners

Having a research operations function can often be the difference between successful long-term scaling and initiatives that fizzle out within weeks. Simply asking designers and product managers to get user feedback is not enough; they must be supported and helped along the way.

If running a simple usability test takes heroic effort by a designer, they will go back to what they know best: making design decisions based on best guesses, with no user feedback. The designer needs:

- Access to a research platform (like UserTesting, UserZoom, or others).

- A library of screeners for the company’s target markets.

- Research study templates for common needs (with documentation).

- An easy way to find the right participants and handle (or not have to handle) participant incentives.

When all this is in place, the friction of running tests is drastically reduced, and the chance of getting user feedback across the product development lifecycle dramatically increases.

Examples of what to create:

- Approved library of screeners for the company’s most common personas.

- An approved set of study templates and a question library covering what most designers and product managers need, along with instructions on how to use them.

- Documentation that outlines the types of research methods (such as usability tests and click tests) that can answer frequent questions.

- Well-documented guidelines on the types of studies that designers and product managers should not try on their own, and procedures for reaching out to professional user researchers in those cases.

4. Provide multi-session training and ongoing support

Most designers and product managers want to be customer-centric. That does not mean they all have the training to execute rapid research. They generally need some basic research training.

The goal is not to train designers or product managers to be full-time professional researchers, but to arm them with enough skills and knowledge to differentiate good research from poorly conducted research. These skills include:

- How to select the right screeners from the library, and edit them safely.

- How to select tasks and write unbiased scenarios, and how to modify them safely.

- How to interpret and extrapolate insights.

- Techniques to influence stakeholders with insights.

- An understanding of the true limits of small-sample research, so they don’t misinterpret research insights.

- A basic understanding of how to use an experience research platform.

A single training session is usually not sufficient to teach these skills. Like any other learning experience, people need repetition and practice before the lessons sink in. Most designers and product managers are trying to get quick feedback on tactical decisions they’re making. The quality of that feedback will dramatically improve if designers and product managers are trained in a series of classes over multiple weeks, and if they have easy access to a trained researcher who can review their studies and/or prototypes before launch. Ongoing support is also needed for interpreting feedback and identifying actionable insights.

Examples of what to do:

- Multi-session research skills training for non-researchers. Your research platform provider should be able to provide this. If they can’t, you need a different partner.

- Set up office hours when non-researchers can seek advice from researchers.

- Offer 1:1 consulting sessions with researchers.

Useful resources:

- Five UX tests every designer should be running in Agile sprints

- Democratizing UX: Who to train (and what to train them)

- Human insight implementation guide for research teams

5. Pilot, demonstrate value, then expand

Enterprise organizations usually approach user research democratization in one of two ways. The first approach is to mandate that all designers and product managers across the organization start conducting research at the same time. The second approach is to first pilot it with a group (or a business unit or a team), learn from it, and then roll it out to one or more teams at a time.

Either approach could work in theory, but after five years of offering services to dozens of organizations, I have concluded that piloting with smaller teams first works best. The intent is not to slow-roll the rollout, but to take a test-and-learn approach.

Scaling is a change to how the organization works and to its culture. Attempting a lot of cultural change across the whole organization at once often fails. Piloting with smaller teams first can be a great way to demonstrate quick value and seed the cultural change you need. Successful pilots become a magnet that makes other teams want to adopt the practice sooner rather than later.

Examples of what to do:

- Run a six-week pilot with a business unit / team of six designers and four product managers.

- Run a second pilot of six weeks with two additional teams before expanding to more teams.

Useful resource:

- Eight valuable tips for building successful democratization

What do you think?

These are my organizational best practices for scaling insights over long, sustained periods and driving a movement for end-user empathy within an organization.

If you have comments on these tips, or have other best practices for scaling experience research, please add your comments and suggestions below.

Photo by Christina Winter on Unsplash

The opinions expressed in this publication are those of the authors. They do not necessarily reflect the opinions or views of UserTesting or its affiliates.