Contents

- Step 1: Get design leadership buy-in and define success criteria

- Step 2: Identify who you’d like to empower and create a rollout plan

- Step 3: Define what you’d like to empower them on

- Step 4: Build assets and resources for them so they can test efficiently and with rigor

- Step 5: Train them

- Step 6: Provide ongoing support

- Step 7: Build a community to facilitate shared understanding

- Scaling without the pain

In my role, I talk to UX research leaders and teams multiple times a week. One of the most common issues they raise is that they are overloaded. They say things like:

- “We’re overwhelmed with research requests. We can’t get to it all.”

- “We’re doing so much evaluative research that we can’t focus on more strategic work. We want to be doing more generative and discovery-type research.”

- “Experiences and products are going live without any user testing or customer feedback.”

Unless you can get a lot more UX research headcount budget, the only solution is to empower more people, especially designers, to gather their own customer feedback and apply it to their work. Designers are a natural fit to take on some of the research work as they are often cross-trained in both research and design, and they likely understand the value of the iterative design process. When I suggest this to these overloaded, overwhelmed UX researchers, they express several fears:

- “But they’re going to do ‘bad’ research.”

- “We don’t trust designers to do research correctly.”

- “They’re going to cherry pick their favorite feedback and circulate it broadly, which will lead to poor decisions.”

As a researcher, I understand the concerns, but I strongly believe there is a way to empower designers to do good research. And it doesn’t have to be hard, complicated, or time-consuming if you follow this seven-step process:

Step 1: Get design leadership buy-in and define success criteria

It doesn’t matter if you empower your entire design team with the best methods, tools, and tech if you don’t have design leadership buy-in. And what do I mean by buy-in?

First, it means that the design leader is willing to allocate staff time to building human insight into the design process. Designers will need to carve out some of their time to collect, analyze, and apply user research findings. It’s typically not a ton of time, but it’s still a shift in workflow that the leader needs to vouch for. To persuade design leaders to allocate some of their team’s time to gathering human insight, focus on the benefits: creating great experiences that deliver results, growing designers’ skills and careers, and building deep empathy with customers so the design team can continue to make smarter, better decisions.

Second, design leadership buy-in means holding the team accountable for actually doing the work. Designers are very busy, and chances are they are used to having someone else do their research for them. If there isn’t a firm requirement and designers get to choose whether to test their work, most of the time it won’t happen. The design leader must be willing to introduce testing requirements and stage gates into the design process and then hold the team accountable. (For example, no prototype gets approved unless it has been tested with users.)

In addition to buy-in, it’s important to align with the design leader on success criteria. The most powerful success metrics demonstrate how design improvements, informed by customer feedback, impact important business metrics like product adoption, conversion rates, revenue, and even cost savings. Success can also be defined by the number of designers who engage with the program and how regularly they run user tests to inform their work. Once the criteria are defined, revisit them monthly or quarterly to see how you are tracking against them, and adjust course as needed.

Step 2: Identify who you’d like to empower and create a rollout plan

When we think about empowering designers, there are some important choices to make.

First, choose the specific design roles you’d like to focus on. Are you interested in empowering all designers or designers at specific levels, such as junior designers or senior designers? Hint: There is no right answer here, and it depends on how big the design team is, the scope of their work, and if there are different levels of designers.

For example, if you are a research team of one supporting ten designers who focus on building prototypes, it likely makes sense to empower them all. However, if you are a team of ten supporting hundreds of designers across a portfolio of products, you may want to focus on lead designers, as they are likely key influencers on the design side and get support from more junior designers.

Hint: There’s no right answer, but be sure to address this in your plan.

Second, decide whether you want to empower everyone at once or take a rolling approach. If you empower everyone at once, you will find natural efficiencies in training, and it will likely be easier to roll out stage gates and mandates at the same time. For example, a healthcare company onboarded all designers at once and then built the training into new-hire onboarding for designers.

A rolling approach allows you to learn with each cohort and iterate on the program as you go (hey! Drinking our own champagne!). It also allows you to build up a set of champions or advocates who will vouch for the program and build demand as you bring on more people. For example, a large telecom company decided to empower all designers, but on a rolling basis. They first onboarded ten designers, learned and iterated on their approach, and then continued to bring on designers in cohorts of ten until all designers were empowered.

Hint: Again, there is no right answer here, but you should define it in your plan.

Step 3: Define what you’d like to empower them on

The worst thing you can do when trying to empower designers is to give them a big, wide-open slate to do whatever type of research they want, whenever they want. They’ll quickly become overwhelmed with options, which often just leads to inaction or disengagement.

Narrow the use cases and focus on how you can help them create better designs and experiences. Find one or two places within their current workflow or process where they could benefit from some quick customer feedback.

For example, if you follow the double diamond design process, two great places to integrate customer feedback are when you are vetting a whole bunch of ideas before you commit to a design and again when you are further down the design process with a low- or high-fidelity prototype.

In other scenarios, your designers may be on an innovation team that is deeply exploring customer problems and deciding which new problems to prioritize and solve. In this case, you likely want to empower them to conduct customer interviews and validate problem statements.

A good human insight vendor will help you identify the best places to integrate human insight into the design process at your organization.

Step 4: Build assets and resources for them so they can test efficiently and with rigor

Once you’ve selected the handful of use cases you want to empower designers on, build reusable assets and resources to make them incredibly efficient. There’s no need for designers to reinvent the wheel every time they want to get feedback on an idea, concept, or prototype.

Here are some reusable assets and resources provided by UserTesting:

- Test plans, templates, and scripts to help them ask the right questions

- An on-demand group of prospects or customers to get feedback from

- Tools, including analysis and reporting templates, to help designers go from a bunch of data to clear direction and action. For example, a competitive comparison template expedites comparing the experiences of two competing offerings.

Pro tip: Creating standard reporting and output templates allows the UX research team to quickly assess what the designers are learning over time. This will position your team to do things like quarterly readouts of valuable insights that have informed important decisions, and activities like these will help further position your team as strategic business partners.

A good human insight partner can help you build these assets.

Step 5: Train them

Once you’ve created the resources and materials for designers to run efficient, rigorous research, you need to train them on how to do it.

Refrain from trying to turn your designers into trained researchers. Instead, focus the training on the handful of use cases you want to empower them on and build your training around that. For example, instead of explaining research methodology in depth, focus on the templates and materials you want them to use to do the work and how to best use them.

Training should include a live option as well as pre-recorded on-demand options.

A good human insight vendor will be able to partner with you as you train the team. You should not have to create the content and do the training yourself, but you can if you want to.

Step 6: Provide ongoing support

While building some foundational training for your designers is critical, you also have to provide ongoing support. This can be in the form of office hours, a Slack channel, or even reviewing test plans or reports before they go live or are broadly circulated.

A good human insight solution will include an “approval flow” feature that prevents a test from being launched until it has been reviewed by an experienced researcher. Consider turning on this feature for designers when they begin to test.

Step 7: Build a community to facilitate shared understanding

Once designers start collecting customer feedback and applying it to their work, they’ll start learning quite a bit and they will likely want to share key findings with a broader community.

Provide them with an opportunity to connect with other designers who are doing similar work. This could be monthly lunch and learns, a place within a tool like Confluence to share insights, or even stack ranking designers by engagement so they can have some bragging rights.

Scaling without the pain

Following this seven-step process to empower designers to run their own user tests is a simple, straightforward, and contained approach that allows UX researchers to offload some work while maintaining research integrity. It will also free up the UX research team’s time to focus on generative and exploratory research. Of course, it won’t be perfect or free of hiccups along the way, but this approach is much better than either of the alternatives: continuing to suffer from overload or just throwing testing software at the designers and expecting them to figure it out on their own.

The diagrams by Dan Nessler, which appeared on Open Practice Library, are licensed under a Creative Commons Attribution 4.0 International license.

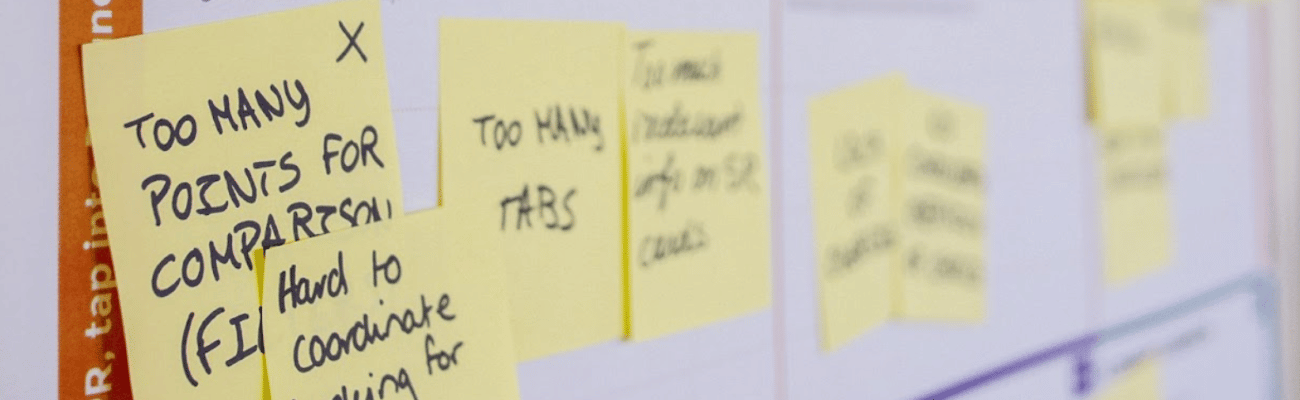

Photo by Daria Nepriakhina on Unsplash

The opinions expressed in this publication are those of the authors. They do not necessarily reflect the opinions or views of UserTesting or its affiliates.
