Table of contents

- Move away from “check box” research

- The importance of usability metrics

- Measurable usability goals drive adoption

- When and how to constrain the non-researchers

- How to help them: templates for tests, templates for results

- Training vs. constraining non-researchers

- Company culture and support from management accelerate adoption

- Don’t measure the success of research, measure the attainment of usability-linked business goals

Summary

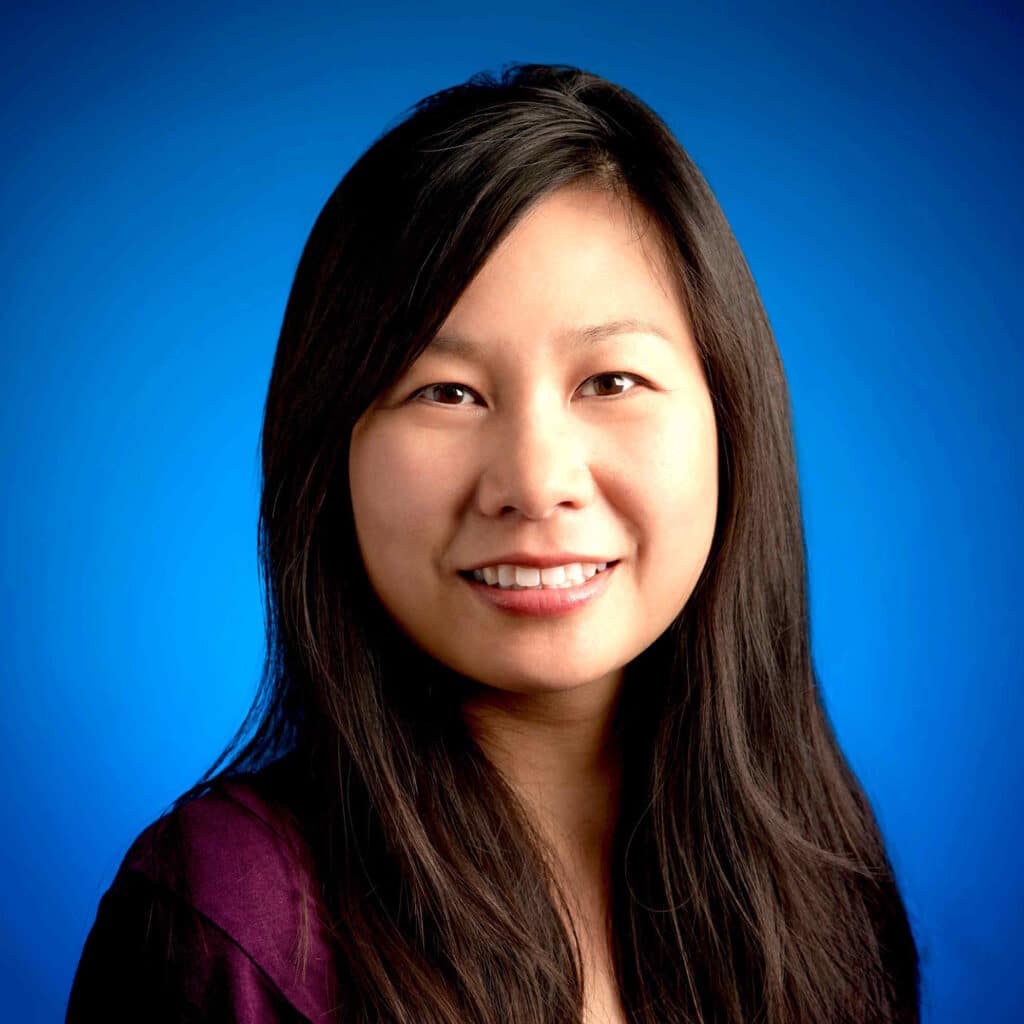

Ann Hsieh drives one of the most successful insight scaling programs we’ve seen. A 20-year UX research leader from some of the world’s most prominent companies, she’s spent the last two and a half years building out the UX research function for a business unit at a very large tech company, scaling insights to a large number of designers and others, and still growing. We visited with Ann to understand the sources of her success and what she’s learned along the way. The topics we covered include:

- To drive human insight adoption, tie your experience goals to the company’s business goals, and create measurable usability metrics that you can track against.

- Lightweight training can work if you surround people with instructions, templates, and hand-holding.

- Company culture and senior management support are critical.

- The wave of layoffs and cost-cutting in many companies makes it even more important to scale insights to non-researchers.

Q. You’ve done UX research at a lot of places. Have you done this sort of scaling before at other companies?

A. I’ve done it at other companies. I’ve been in user experience research for about 20 years: started my career at Yahoo for three years, and then Nokia for a year and a half, Google for about seven years, Facebook for about two and a half years, and then Walmart e-Commerce for about a year and a half before I joined my current employer about two and a half years ago.

Scaling research I did more on a leadership level, probably starting more at Walmart because I was head of research there. I managed about 20 researchers, and we supported 200 designers and I don’t even know how many product managers. So we definitely had to have a scaling model.

We have fewer researchers in my current company, so we need to scale a lot more. I would say at Facebook and Google and Yahoo, there was more kind of an embedded researcher model.

But I would say here, and a bit at Walmart, I was embracing designers and product managers doing some of the more tactical research.

Q. When you arrived in your current role, what was the team like? Did you start the research function in your organization from scratch? And how did the process go from that to where you are today?

A. We were a small team with big goals, so we did things like have expert training on doing research methods and being unbiased. We also made playbooks and templates on research plans, and moderator guides for different methods. And then also we created an interview series where we could help with recruiting, but then a PM or a designer could run the interviews. We figured out a lot of different mechanisms.

Now I have more people on my team, but we’re still embracing similar mechanisms of using playbooks, using templates, figuring out what things make sense for the researcher to do versus a product manager or designer. We researchers still review or give approval before a UserTesting study launch, for example.

Some of the designers are very new to research and user testing. So it still needs us to kind of review all the work to make sure everything is consistent and high quality.

Move away from “check box” research

Q. When you came into the company, did the designers and PMs want to be empowered to do research? Or did you have to convince them that they needed to do it as opposed to just guessing?

A. I definitely did a lot of education in the beginning. When I joined, I think research was much more reactive. There was a lot of, “We want a usability or validation study on this.”

When I join a new team or org or company, I always do interviews myself of all the stakeholders and ask them, what’s your experience been with research? What are your needs? What do you think could be improved?

So I did that when I first joined, kind of soaking up knowledge and also interviewing stakeholders on their perception of research. And it was pretty apparent there wasn’t formalization. For example, people were saying, “Oh, I wish there was a repository, because I can’t find old research.” And also there had been a lot of reorgs. So people weren’t exactly sure who to ask.

So one of the first things I did was build a wiki, which helped to do the scaling. I wanted to make sure that it was a taggable repository. I also wanted to make sure there was a playbook, which I had used in a previous role. And I wanted to make sure we had prioritization principles, a mission and kind of tenets on what kind of research our team did. And then I just did a lot of education.

Some stakeholders were very used to, “Come help us right away. We’re launching something in two weeks, and we need you to do a validation.” That always hurts me; you just want us to be a check box and say good things about the thing you’re going to launch.

So I had to move them away from that mentality and teach a little bit about, “I don’t think that’s the best use of research; we already have very, very few researchers as it is. So we’re going to do more strategic research that could be across multiple teams, and in terms of the tactical launch readiness type of studies, happy to help your team run those on your own.”

I think we’ve matured a lot. Now we have a usability framework that focuses on the tenets of usability. And we’re looking at task success and time on task. So we’ve built these robust metrics.

The importance of usability metrics

There’s a lot of metrics that have been adopted since the eighties. A lot of companies measure behavioral metrics like simple analytics. When I was at certain companies, one thing that we usually measured was page views and then task time, looking at efficiency. You can quickly see a funnel, especially if you look at people who go into a search engine and search for something, and then they drop off when you have to do a login or anything like that. So those are typical metrics that you would look at.

But we can also measure usability, depending on the complexity of a task. You give someone what we would call a core task to do something. On a search engine, it’d be find a crib and figure out how to purchase it. People do a search, look at different images, go into shopping, look at the price… You can figure out the time to do that, see any barriers to it, and see whether there are findability issues or people weren’t successful. Satisfaction is more self-reported, like “how satisfied are you?”

And then engagement was more recently added in 2000; that was something that social media companies always goaled on. You’ll hear social media companies talk about daily active users and whether they are increasing or decreasing. That one was really interesting because people hadn’t thought about it in the past.

That’s been an interesting game changer in terms of what kind of metrics we actually want to drive. Is it time on task? Are there different types of engagement?

Some examples are the number of downloads, or number of shares, or likes. You can measure some of that in a usability study. You can see task success rate, which is effectiveness. You can see time on task, which is efficiency. You can ask about satisfaction and perceived engagement. And then, on the behavioral side, you can track that. So then you can see the predictiveness: this is how we currently are, and this is how new design iterations could measure against it.
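As a rough illustration of the before-and-after comparison described above, here is a minimal Python sketch. The `Session` record, the 1-5 satisfaction scale, and all of the numbers are assumptions made up for the example; they are not the team's actual framework or data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    """One participant's attempt at a core task (hypothetical record shape)."""
    completed: bool          # observed task success
    seconds_on_task: float   # time from task start until success or abandonment
    satisfaction: int        # self-reported rating, assumed to be on a 1-5 scale


def summarize(sessions):
    """Effectiveness, efficiency, and satisfaction for one design version."""
    return {
        "success_rate": sum(s.completed for s in sessions) / len(sessions),
        "mean_seconds": mean(s.seconds_on_task for s in sessions),
        "mean_satisfaction": mean(s.satisfaction for s in sessions),
    }


def compare(baseline, iteration):
    """Change in each metric, computed as iteration minus baseline."""
    return {name: iteration[name] - baseline[name] for name in baseline}


# Made-up sessions for the current design and for a new iteration.
baseline = summarize([
    Session(True, 120.0, 3),
    Session(False, 200.0, 2),
    Session(True, 100.0, 4),
    Session(False, 180.0, 2),
])
redesign = summarize([
    Session(True, 90.0, 4),
    Session(True, 110.0, 4),
    Session(True, 80.0, 5),
    Session(False, 160.0, 3),
])
delta = compare(baseline, redesign)
print(delta)
```

With samples this small the figures are descriptive rather than statistically conclusive, but they give each new iteration a baseline to be compared against.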

That’s been a lot better. When I started out with this team there was a lot of “do you like this?” type of questions that I would never have asked in a usability study. And so I’ve been teaching designers and PMs how to objectively give tasks during the usability study, figure out how to measure task success, how to do that with a before and after, and how to do what I call design experiments, and make sure that we are actually improving. Moving away, especially, from that validation model to more evaluative. And then also having the researchers be viewed as strategic partners.

Q. You’ve talked about how you began the journey. Now talk to me about where you are today — what sort of work are you doing, how many employees do you have directly on research versus how many people are you enabling, volume of work? Help me understand how the flow’s working right now.

A. I can’t give you exact numbers, but my team has grown a little and the number of people we scale to has grown a lot. I would say we’re still setting up more things and figuring out more ways to scale.

Measurable usability goals drive adoption

Q. Do you have any sense of how ubiquitous the adoption is? You’ve got a bunch of designers, you’ve got a bunch of product managers, do you feel like they are all now taking up the ball of doing evaluative research? Or is it like, some of them are doing a lot, some of them still aren’t doing it at all?

A. I would say it’s pretty high. We took an important goal to improve our experiences, and our teams are working on that.

Q. So that’s a lot of scaled research your team is supporting. For your team, your direct employees, how much of your time are you running your own generative research versus how much are you supervising and empowering others? What’s the mix for you?

A. Yeah, we’re still figuring that out. <laugh>

It can be difficult. Ideally it’d be like 80-20. But I do think sometimes, because there’s just so many more designers, that we end up doing more support, which I feel mixed about. The good thing is we’re doing this goal, and we’ve been telling success stories about it. And so I do want to make sure my researchers get credit in terms of helping the designers to improve designs. At the same time, I also want them to have time to do the strategic work.

So yeah, it’s definitely a balance and we’re still figuring it out. But I guess the good thing is that our work is getting showcased more and more and I think that’s really important.

Q. Do you have an operations function? Or do your folks do their own ops management?

A. We’re still setting some things in place for that. We want to help each other recruit, but we wouldn’t want one of our people to spend a hundred percent of their time just recruiting for everyone across the company. That’s why we’ve built up recruiting screeners on UserTesting.

When and how to constrain the non-researchers

Q. Let’s talk about these non-researchers that you’re empowering to do stuff. Are there particular methodologies that you will or will not let them use? What barriers have you put around them, or have you not tried to?

A. Recently we have started to require that they get approval from us in order to launch a UT test. We want to make sure that the research is consistent. We have quite a lot of new designers, so they’re new to research.

We do have some non-researchers or designers doing some things that are a little bit more complex like card sorting or even surveys. So we’ll have office hours where we’ll help people on that. We have a survey guidance page on our wiki.

We would also encourage people to do interviews. Covid stopped us from doing field research altogether.

I haven’t had any designers do anything super complex, like a diary study. Not that I’d be completely opposed to it, I just think they’d need a lot of guidance and help on that, especially the analysis part.

And then there’s participatory design; we may actually do some coming up soon. I would want to set up some guidelines on that before we let lots of non-researchers do that.

Q. How do they know when they should ask you for help? It sounds like you’ve got approval flow turned on, so is it that they have to come to you at some point in order to get it done?

A. Yeah, basically they have to.

How to help them: templates for tests, templates for results

Q. What sort of training do you do for them? Do you have a bunch of formal classes that you do? Do you run those, or do other people run those?

A. I have training that I started at another company. It’s about a 30- to 45-minute class. It’s basically how you start from different questions. Usually a product manager will be like, “How many people are going to use this?” Or a designer might be like, “Which version do you like?” And then how do we translate that as a researcher into a research question?

And then how might we broaden that, and how we choose different methods, and then how do we think about how to ask those questions without being leading.

And then also even observational things that you can still do in a remote setting, like just seeing people pause, like how to not be biased and kind of match their behavior.

And then we had a quiz too.

And then we have those templates. I think the templates help a lot. For example, a research plan is something that I think people who are really new especially like.

At every company I’ve been at, everyone’s like, “Oh, I love surveys because it’s a high number and that will just tell me what to do.” But then they design the survey as a very leading type of thing that won’t really answer the question. And so I’ve made a research plan for a survey, which people use as a template, and they are like, “Oh, I didn’t know I need to think about all these different things. Like, what kind of decision are you trying to make? How would you analyze this? What’s the sample size you need?” And then also if you get a data point of like 50% this versus 70% that, does that actually help you make a decision or not?

You know, as researchers we know that’s how to decide which method to use. But I think for stakeholders, they kind of jump to method and they don’t realize you have to step back and think about that beforehand.

Q. So, they get the study worked up, they’ve had some training, they get approval from you to run it. Do you stay involved when they get around to interpreting the results? Or are they kind of on their own for that?

A. It really varies. I also have an analysis template that shows how to put together themes and patterns that you’re seeing. I also have an analysis spreadsheet where we actually download the Excel from UserTesting, and then we manually add a row in. For example, we have participants say whether they perceive they were successful. And then we go in and manually watch the end of the videos to see if they actually were successful. So we add that and then we calculate the time on task and perception questions and then we have final scores, and we are able to come back and compare results to previous versions.
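The spreadsheet step described above, where self-reported success is checked against what the video actually shows, could be sketched roughly as follows. This is only an illustration: it uses inline CSV text rather than a real Excel export, and the column names, participant IDs, and values are all hypothetical rather than UserTesting's actual export format.

```python
import csv
import io

# Hypothetical export; these column names are assumptions, not a real format.
EXPORT = """participant,perceived_success,seconds_on_task
P1,yes,95
P2,yes,140
P3,no,210
P4,yes,120
"""

# The manually added data: observed success after watching the end of each video.
OBSERVED = {"P1": True, "P2": False, "P3": False, "P4": True}


def load_rows(text, observed):
    """Merge the exported answers with the manually observed outcomes."""
    rows = []
    for row in csv.DictReader(io.StringIO(text)):
        rows.append({
            "participant": row["participant"],
            "perceived": row["perceived_success"] == "yes",
            "observed": observed[row["participant"]],
            "seconds": float(row["seconds_on_task"]),
        })
    return rows


def score(rows):
    """Perceived vs. observed success rates, plus mean time on task."""
    n = len(rows)
    return {
        "perceived_rate": sum(r["perceived"] for r in rows) / n,
        "observed_rate": sum(r["observed"] for r in rows) / n,
        # Participants who believed they succeeded but did not.
        "false_success": [r["participant"] for r in rows
                          if r["perceived"] and not r["observed"]],
        "mean_seconds": sum(r["seconds"] for r in rows) / n,
    }


rows = load_rows(EXPORT, OBSERVED)
result = score(rows)
print(result)
```

Participants listed under `false_success` are the ones whose videos are worth a closer look, since they thought they had finished the task but had not.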

It’s a little bit complicated for a new designer, so we have been helping on that. Depending on our bandwidth, we can either help on the entire analysis or we can show them one participant and have them do the rest or something like that. It really depends on our availability and how high priority it is.

Training vs. constraining non-researchers

Q. I’ve been talking to folks at other companies who’ve tried to scale, and I think I am seeing two different approaches. I’m seeing places where they focus very heavily on doing intense training. For instance, if you are a non-researcher like a designer, you’re going to go through a six-session course. At the end of this, you get certified, and if you are not doing the homework properly, you will be kicked out. If you’re not engaging properly during the classes, you’ll be kicked out. It’s a really formal controlled training program. But then at the end of it, when you are certified, they kind of let you go. You can do whatever you want, go run your studies, et cetera.

I think what I’m hearing from you is a model where it’s less controlling upfront in terms of the volume of formal training, but then there’s all sorts of boundaries you’ve put on them in terms of they have to get approval for the test before they can launch. It also sounds like you’ve got an enormous amount of documentation in terms of templates: Here’s how to analyze them, here’s how to use the template, here’s when to use it. So it’s kind of like, rather than training them heavily, you’re constraining them and supporting them through all the stuff that you’ve done around it. Am I reading that right?

A. Yeah. I would also say some of this is newer, like we didn’t do this approval thing until the last few months, before then it was a little bit more wild, wild west, which wasn’t great. I mean, we did have templates, but we didn’t have the bandwidth to approve 40 or 50 people’s work. It just would’ve been prohibitive in terms of our time. But it did hurt our hearts a little bit.

And so, because this is a goal, my researchers asked me to do this: “Let’s just put everyone on review.” We also had an influx of brand new designers, as I mentioned, that hadn’t really done research before. And also the design managers brought it to my attention. They were like, we see them asking, “do you like this or not?” And so they actually wanted that too, which was great.

Even with this design team we work with, there are still a lot of sister teams that share UserTesting with us, and we don’t necessarily see everything they do. We have office hours once a week where we give advice, give templates, and still review as much as possible. We call it governance. We try to do as much as we can, but we can’t necessarily govern everything as a very small team.

We don’t do the whole intense training thing, because I think that would make sense more for a very small company. Honestly, reflecting on layoffs happening recently with researchers, I don’t want people to think you can become a researcher in six weeks and then you don’t really need us.

There have been a lot of different debates. Some people are like, “It’s not really research, we should call it empathy building” <laugh>. There are different schools of thought on that.

I think the second approach makes sense in terms of getting people to feel like they can get customer feedback and be customer obsessed, but at the same time ensuring there’s a level of quality or consistency as much as possible. But also not letting perfect be the enemy of good. That’s why I try to tell my team, “I know we can see a lot of issues everywhere, but we can’t control 500 people.” We have to pick our battles and figure out where we can have the highest impact.

Company culture and support from management accelerate adoption

Q. Let’s talk about adoption. Some companies have this idea that they’ll empower all the designers and product managers, maybe do one training session, and then everyone will use it automatically. Then they look a few months later and out of a hundred people trained, seven of them are using the platform and the rest of them have gone back to their old business processes. Did you have a problem with adoption at first?

A. We are fortunate. I feel like it’s actually been the opposite. People here really want to use UserTesting, but we have been limiting that to designers. I think a lot of product managers would want to use it, but we just don’t have the licenses. We tell them you have to get funding in order to do it.

I think the good thing is, customer obsession is a leadership principle for our company. And that can manifest in both data as well as what we call anecdotes or qualitative research.

And so every document that product managers or even designers write, like a product document, they want to include quotes from users. It’s pretty highly encouraged. I would say adoption is pretty high.

We also just get a lot of requests for us to do research for them. And we often tell them we cannot because again, we can’t run a hundred studies a year. Sometimes people think we’re a service org and they can submit something to us and I have to prioritize and say, we can’t work on projects outside our roadmap.

So I would say it’s the opposite. There’s a lot more demand than what we can connect with.

Q. How do you measure the success of this whole thing? Are you tracking the number of studies that they’re running? Or is it just if they feel satisfied that they’ve got the right info, you’re satisfied?

A. I think that’s always challenging as a researcher. In one doc that I wrote recently, we did write, “We did X number of studies with this many recommendations.” We’ve done different things with the recommendations. We’ve put them into tickets, and that one’s tougher. I think people don’t like to be assigned a ticket. Also, some of our recommendations are longer term, so it’s not like they’re going to be resolved within a month. It might take a year or longer.

We share our research insights in business reviews and work with product managers on initiatives to address the top pain points. I think that’s a good thing because the reaction to our research is not just, “Oh, that’s kind of interesting to know.” It actually will drive an initiative that a team is going to do, or change an initiative, or if the team doesn’t agree to it, then maybe it’s a hotly debated topic that will need escalation from leaders. And these are all good steps to getting impact.

Don’t measure the success of research, measure the attainment of usability-linked business goals

Q. Theoretically, doing a lot of this research should be resulting in lower amounts of rework, fewer sprints that you have to go back and redo, faster time to market with successful products. Have you ever been able to trace it back to those sorts of things? Or is there so much distance between the research and the outcomes that you can’t track it?

A. I will say having a usability framework has been useful for that. It varies depending on the stakeholders and their willingness to embrace our recommendations. If their baseline is really low, sometimes they’re upset about it and then we talk about it. It’s a way to measure the current status and then, if we do new iterations, this is how it has improved. It’s a low-cost way to do that.

It’s only been a few months, but we already have had two good success stories on it and we can actually say things like, it’s improved by X%. And leadership has been really happy to hear that.

Q. Let me repeat this back because this is an important one for me to understand. Before you even do studies and such, you’re starting with a set of metrics that you’re looking to move. They are usability metrics tied to the company’s business goals. You can then design the research to make sure that you are addressing those things. And then after the fact, you can look back at those metrics and see if you’re moving them. But it’s all from having that framework at the start before you start doing it.

As opposed to what I see a lot of companies doing, which is after the research, they then go back and try to demonstrate ad hoc what the value was. But it’s a lot easier if you start with knowing how you’re going to look at that.

A. Exactly.

Q. Along the route, as you were doing all of this stuff and making these changes, were there particular barriers that you ran into a lot? What were the biggest problems? And how do you overcome those things?

A. We moved really fast. I think the biggest challenge is always the process of getting stakeholder buy-in. Sometimes you can’t always get everyone on board and people can be really resistant. It can be a culture change.

Most companies are structured where product owners own a certain app or product and they don’t think holistically about what the user thinks of their tasks. So for example, another company has a framework called product excellence or critical user journeys. And the critical user journey could be something like I want to buy a crib. And so the steps that a user could do is go to a search engine, do a search, and then go into images, check on different images, and then go to shopping and open different results. And then they go and eventually buy a crib from Wayfair, for example.

But from the business owner’s point of view, that’s five different products. That’s web search, that’s image search, that’s shopping search, and then maybe even a web browser team is involved.

So as a researcher, you’re going to have to talk to each of those teams. And of course those people all think very siloed. They’re like, “I only work on image search, so I don’t want to change that.”

So that’s the challenge as a researcher, right?

It’s the same thing here where we go in and we’re like, “We saw this problem with this process,” and the team we talk to will say, “We are just on this one tiny app that’s part of this process, but that’s another team’s problem.” And we have to work with them and convince them.

What’s been really useful about usability testing is that when we analyze the task success paths, it’s not just about whether they were successful or not, which we definitely see, but where they went instead of the success path we thought they would take. That actually indicates their mental model.

So that’s where we share with the teams, “We believe they think this about sales, but actually they think to go here.”

And you know, sometimes stakeholders get angry about that. Like sometimes they’re very resistant, “that’s another team’s responsibility.” And then we have to be like, “No, this is actually an interesting insight that may help you discover a feature that maybe you should include in your app that you didn’t think about before.”

It’s always a challenge with research. Sometimes we feel like we’re bringing bad news, but I think that’s where we can drive a lot of change.

Q. It’s hard for me to picture a designer who’s assigned to one project being able to run with that level of cross-project analysis and negotiation. Is this the whole reason why you want to empower designers to do the evaluative projects, because constructing something like that and persuading people about it is a heavy engagement that requires a researcher, a really deep intellectual project on a whole bunch of different levels?

A. Yes. It’s our job to work with those teams and ultimately improve the product experience.

Q. You mentioned earlier that people wanted to access old research more easily. What did you do to make that easier? Did you buy one of the research hubs or something like that?

A. It is just a part of our wiki. I set up a page that has the research title, the link to the plan, the name, a description, and some keywords. Our wiki does have some way to search that. But it’s unfortunately still pretty manual. We also have a newsletter that we send out with links to our research.
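For readers who want to picture such a repository entry, here is a minimal Python sketch of one record with the fields mentioned above, plus a keyword lookup. The class, the field names, and the sample entries (including the placeholder links) are hypothetical; the team's repository lives in a wiki, not in code.

```python
from dataclasses import dataclass, field


@dataclass
class ResearchEntry:
    """One row of a research repository (hypothetical field names)."""
    title: str
    plan_link: str      # placeholder path, not a real wiki URL
    researcher: str
    description: str
    keywords: set = field(default_factory=set)


def find_by_keyword(entries, keyword):
    """Return the entries tagged with the keyword, ignoring case."""
    wanted = keyword.strip().lower()
    return [e for e in entries if wanted in {k.lower() for k in e.keywords}]


# Made-up entries for illustration only.
entries = [
    ResearchEntry("Checkout usability baseline", "wiki/research/checkout-baseline",
                  "Researcher A", "Task success and time on task for checkout.",
                  {"usability", "checkout"}),
    ResearchEntry("Onboarding interviews", "wiki/research/onboarding-interviews",
                  "Researcher B", "Stakeholder and user interviews on onboarding.",
                  {"interviews", "onboarding"}),
]

matches = find_by_keyword(entries, "Usability")
print([e.title for e in matches])
```

Keeping the keywords consistent matters more than the tooling, since any wiki search only works as well as the tags people enter.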

I do think it’s good that we’ve built it. We reference it a lot when people ask us questions, so it’s been useful.

Photo by Krivec Ales on Pexels.

The opinions expressed in this publication are those of the authors. They do not necessarily reflect the opinions or views of UserTesting or its affiliates.
